Advanced artificial intelligence models can already churn out computer code and help discover new pharmaceuticals, but when it comes to identifying simple objects, they might still have something to learn from the humble rat. That's the conclusion of a paper published this week in the journal Patterns, in which researchers from the Scuola Internazionale Superiore di Studi Avanzati (SISSA) in Italy tasked an image recognition model with replicating rats' ability to recognize objects that were rotated, resized, and partially obscured.

The AI model was eventually able to match the rats' image processing capabilities, but only after using more and more resources and computing power to catch up. Identifying objects in their original position was easy for both the AI and the rats, but researchers had to boost the model's performance to match the rats' processing capabilities when the objects were altered in various ways. Researchers say their findings suggest that rat vision, fine-tuned over millions of years of evolution, is still more efficient than even powerful image recognition systems.

Rat vision appears uniquely 'efficient and adaptable'

Rat vision differs from the way humans see in several notable ways. For starters, like many mammals, rats have eyes positioned on the sides of their heads. This gives them a wider field of view, which is useful in the wild for spotting and avoiding predators. Perhaps more bizarrely, past research has shown that rats' eyes also move in opposite directions from one another depending on the orientation of their heads. This makes rats appear "cross-eyed" when they point their heads downward. The rats in this experiment were trained with treats to identify objects shown on a monitor, and they triggered a touch sensor when they identified the target object.

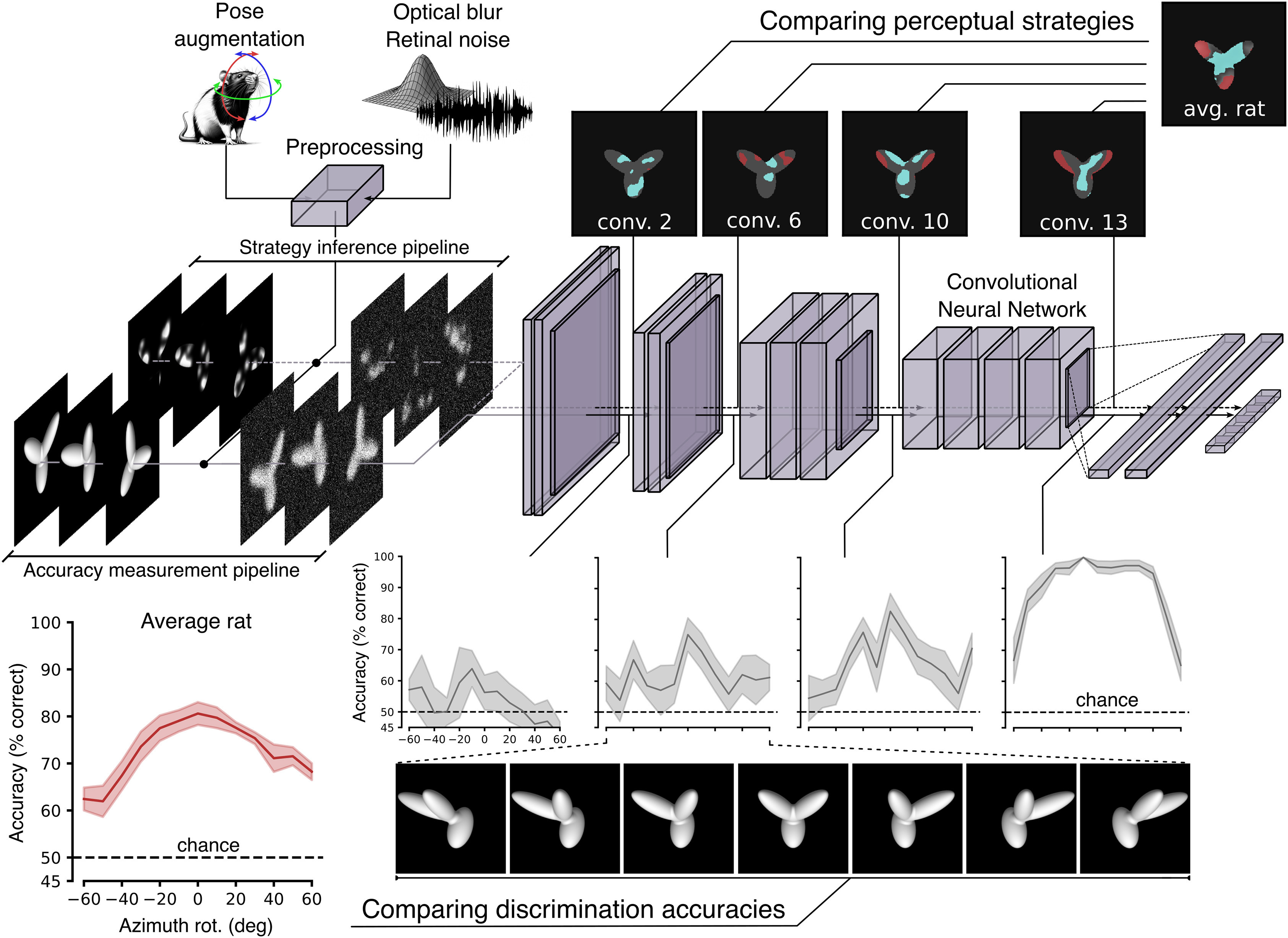

To see how rat vision stacked up against AI, the SISSA researchers created a "convolutional neural network" (CNN). This type of deep learning model, widely considered by engineers to be among the most advanced AI systems for image recognition, was itself modeled in part after the mammalian visual cortex. CNNs use a layer-based system to identify objects. The initial, most rudimentary layer processes simple features like edges and contrasts. Additional layers stacked on top identify increasingly complex features. Each additional layer requires more resources and computing power. It's almost like a towering lasagna that needs more ingredients to become taller and denser.

The CNN was then tasked with replicating the test rats' ability to recognize objects under various conditions. At the most basic level (identifying an object that was unobstructed and in its normal position), both the rats and the AI nailed it. In that case, the AI model only needed to use its first layer. But that changed as the tasks got more difficult. When the objects were rotated or resized, the CNN needed more layers and more resources. The rats, on the other hand, kept identifying the objects consistently when they were transformed, and even spotted them when they were partially obstructed, something the AI struggled with. Rat vision, the researchers concluded, seemed generally more flexible and adaptable than AI image recognition.

“Rats, often considered poor models of vision, actually display sophisticated abilities that force us to rethink the potential of their visual system and, simultaneously, the limitations of artificial neural networks,” SISSA neuroscientist and paper author Davide Zoccolan said in a statement. “This suggests that they could be a good model for studying human or primate visual capabilities, which have a highly developed visual cortex, even compared to artificial neural networks, which, despite their success at replicating human visual performance, often do so using very different strategies.”

AI still has much to learn before it should really be considered 'superintelligent'

The rat vision study should serve as a helpful reminder that powerful AI models are indeed impressive at some specific tasks, but they aren't infallible. Late last year, OpenAI CEO Sam Altman published a manifesto-like blog post saying the world may experience AI "superintelligence" within "a few thousand days." Billionaire Elon Musk has similarly said superintelligent AI will likely arrive this year.

But what do those benchmarks really mean? Yes, large language models have already outperformed some humans on standardized medical and law exams. (AI still can't make an official medical diagnosis without a doctor, and lawyers have been fined and suspended for submitting AI-generated legal briefs that included fabricated facts.) At the same time, advanced AI systems built into bipedal robots still often have trouble balancing. And, as the SISSA research suggests, AI seemingly struggles to match the visual flexibility of rats. In other words, AI still has much to learn, from both humans and animals.