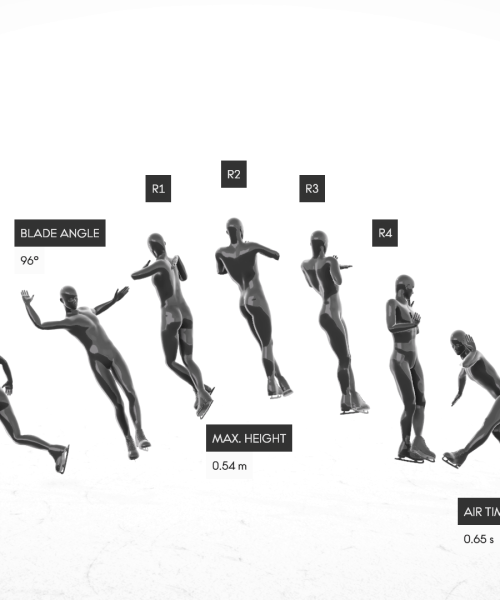

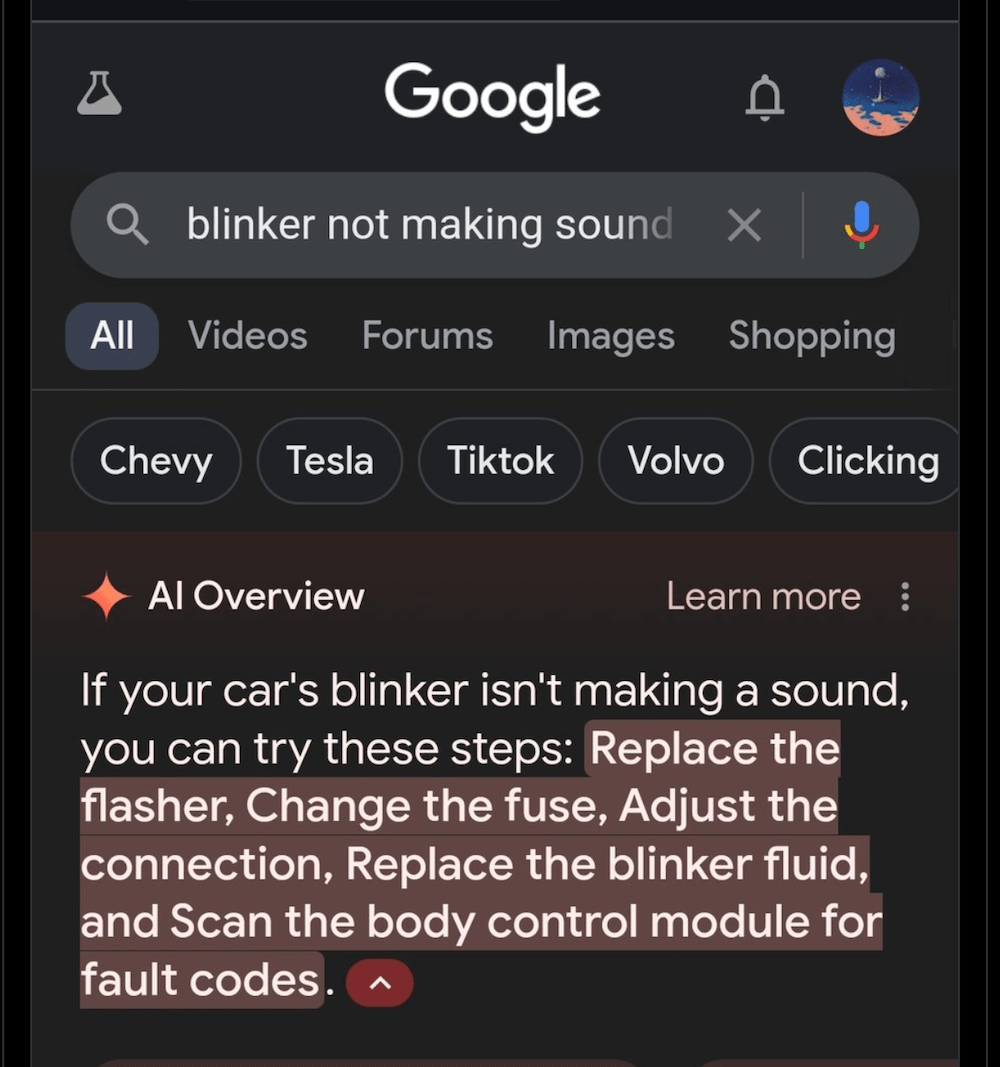

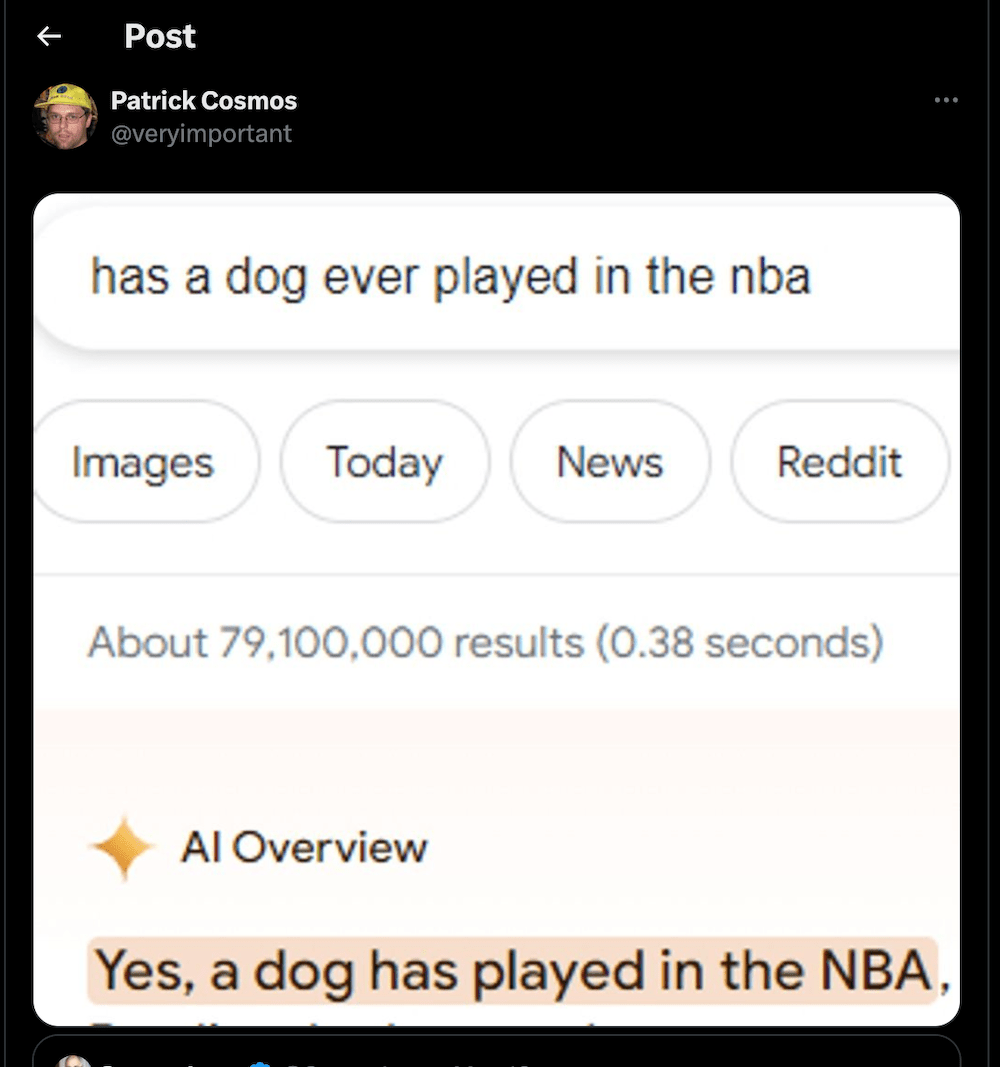

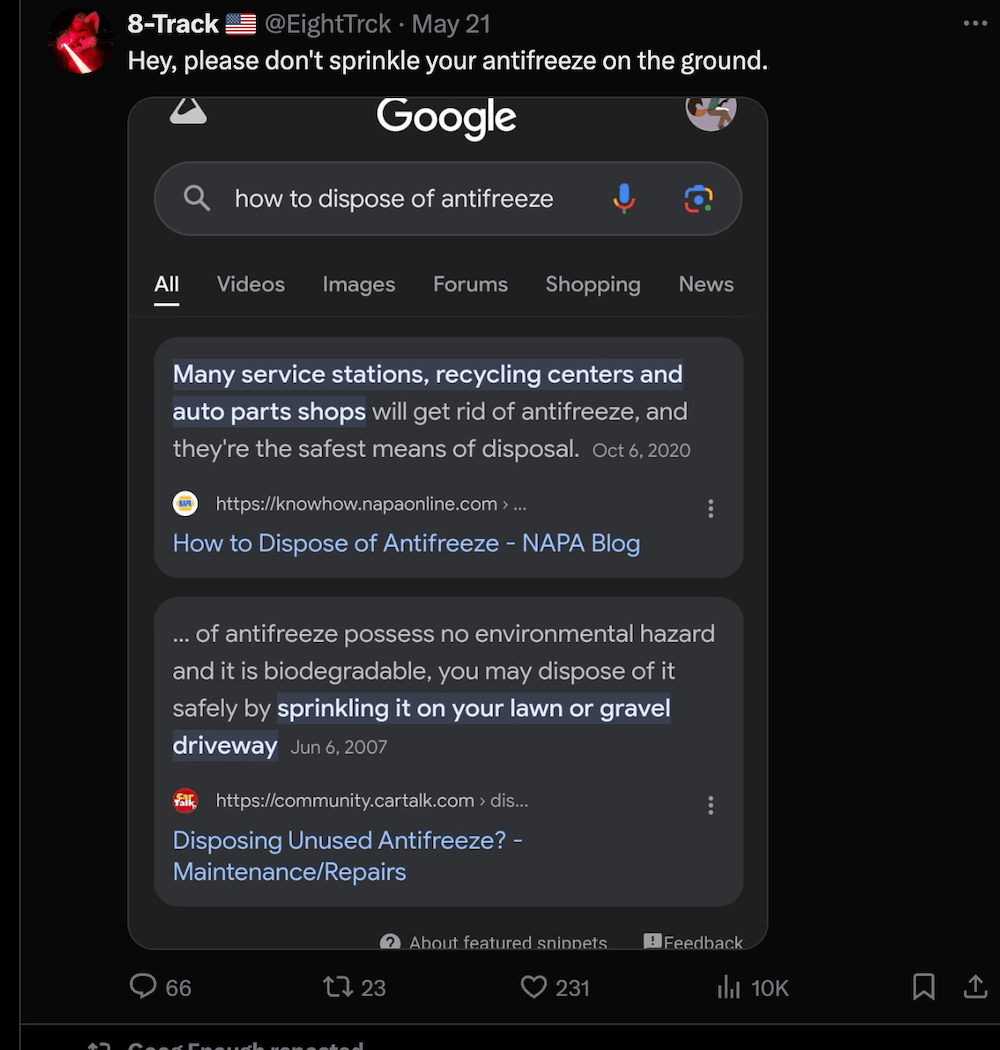

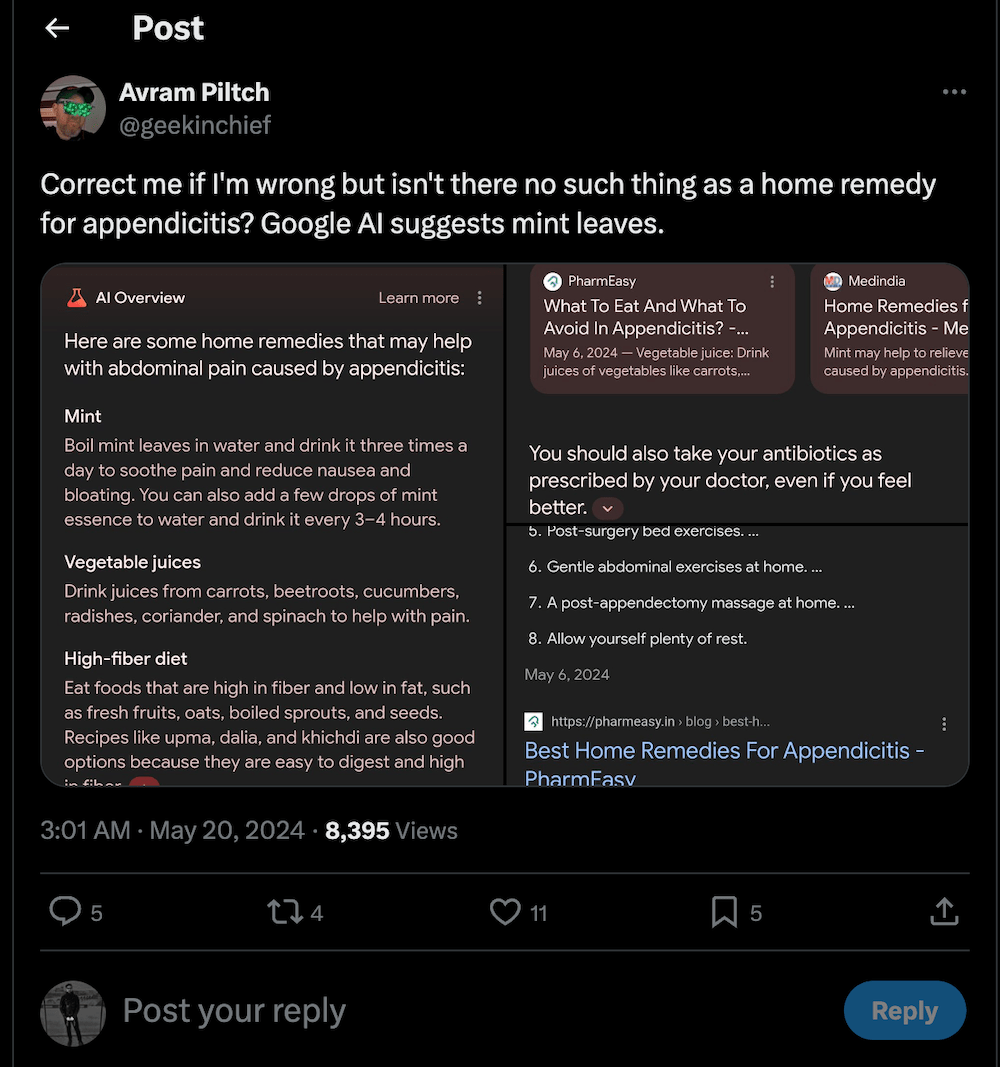

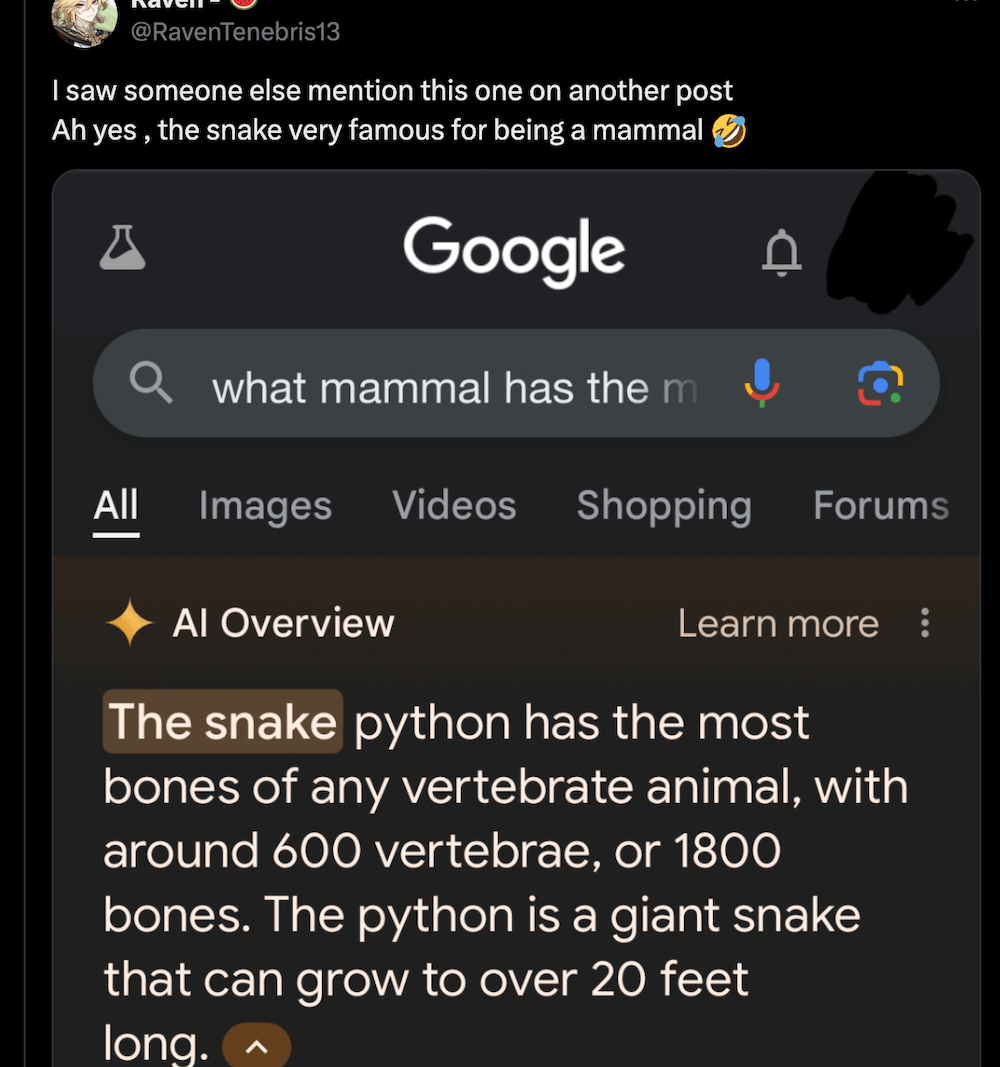

Dogs playing for the NFL. Tips on replacing your car’s “blinker fluid.” Suggesting running with scissors to boost your immune system. These are only a few examples of what it’s like to use Google’s new AI Overview feature—and the first thing many people see when trying to use the world’s most popular search engine.


After years of (potentially illegal) industry dominance, Google’s name has become synonymous with “searching the internet.” But its increasingly controversial generative AI projects may rapidly erase that reputation—particularly the experimental “AI Overview” feature already available for many users. Pitched as a way to distill a wide cross-section of websites and already published (often copyrighted) content, AI Overview supposedly allows people to visit “a greater diversity of websites for help with more complex questions,” Google wrote in its new tool’s announcement earlier this month.

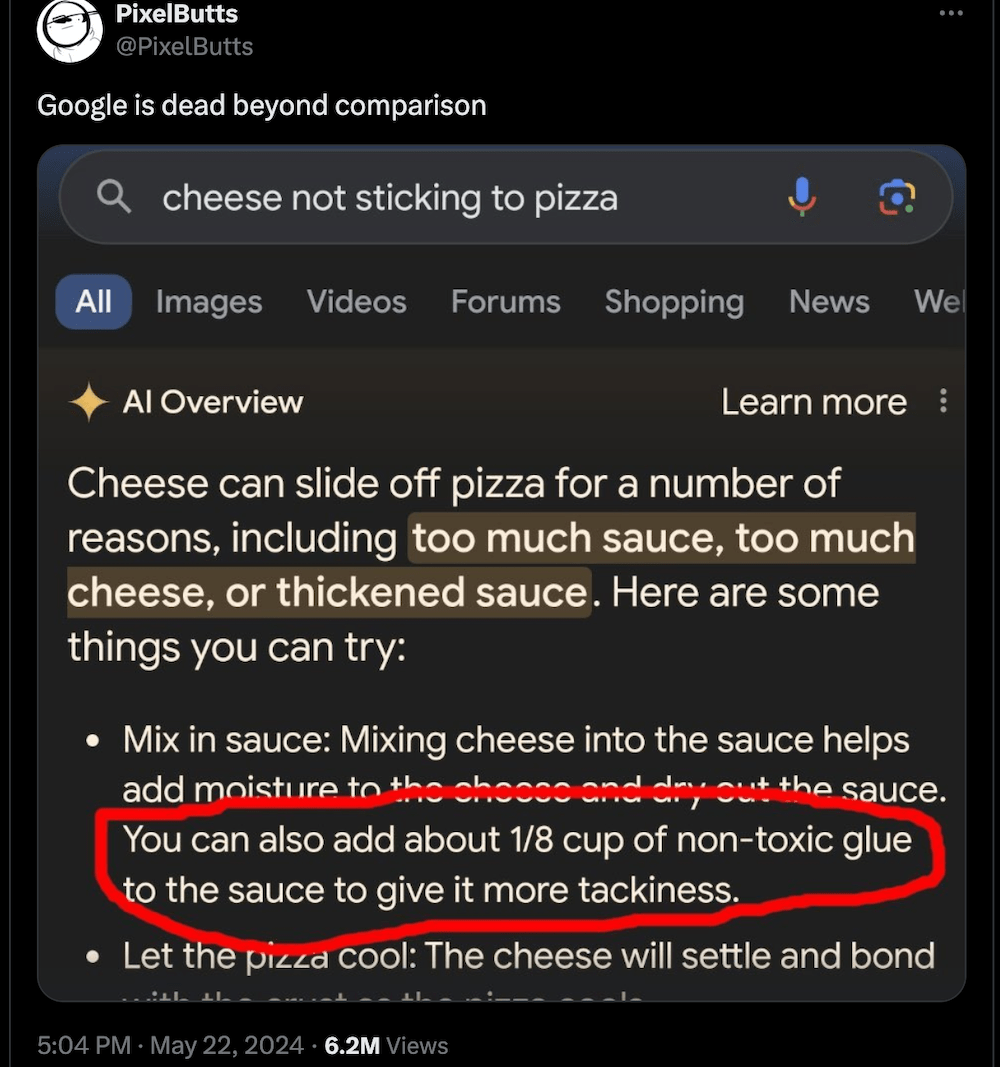

But while AI Overview may get many answers right, when it is wrong, it is apparently extremely wrong. The most viral example so far is Google’s suggestion earlier this week to thicken pizza sauce using “1/8 cup of Elmer’s glue,” prompting a steady flow of jokes. It now appears AI Overview harvested the (terrible) recipe hack from a decade-old joke on Reddit, leading some people to point out that training the large language model on Reddit posts was a mistake. (Google is now paying the forum website $60 million a year to do exactly that.)

In a statement provided to The Verge and others, a Google spokesperson claimed that suggestions like slathering pizza in adhesive paste only appear for “generally very uncommon queries, and aren’t representative of most people’s experiences” of using AI Overview. But setting aside the fact that Googling pizza sauce recipe tips isn’t “uncommon” at all, the existence of an X account dedicated to highlighting potentially hundreds of “Goog Enough” AI Overview examples speaks to the ongoing issue.

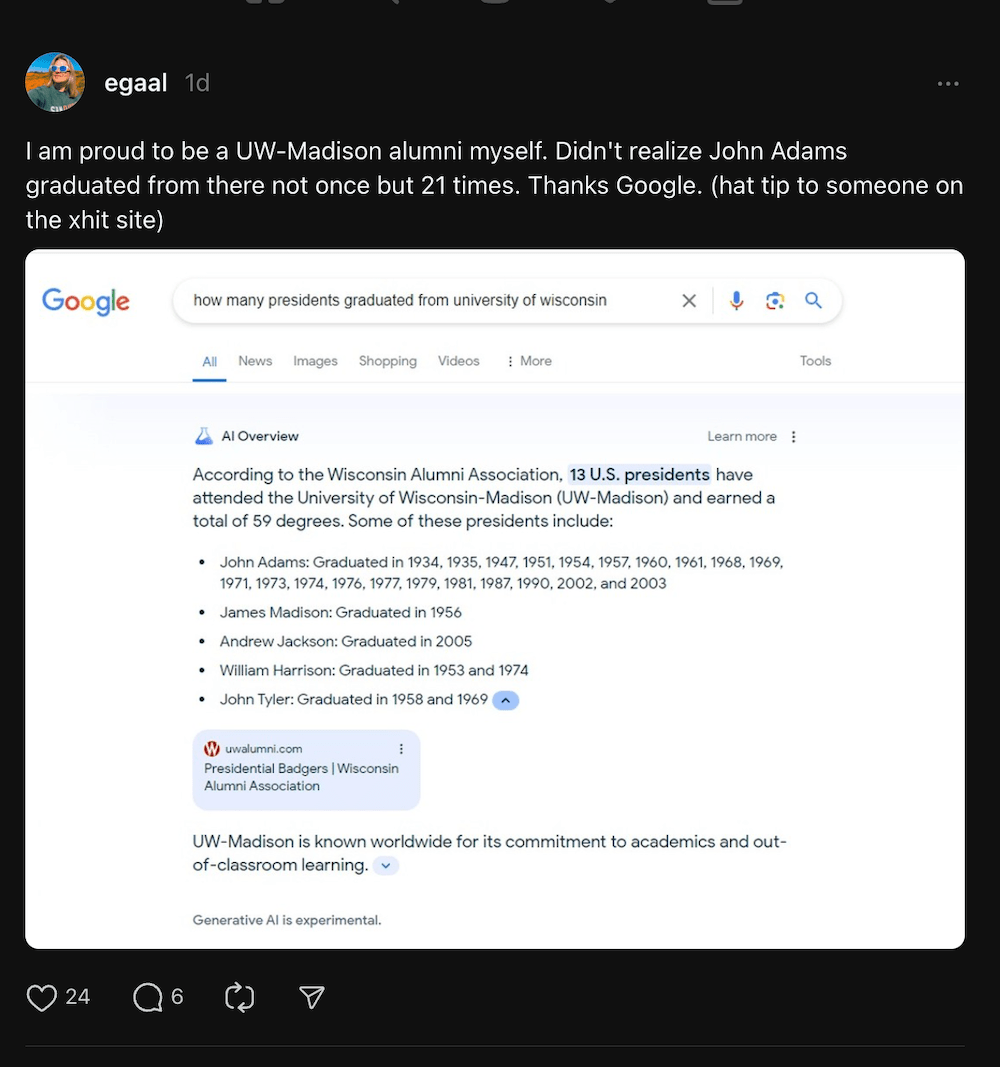

Of course, it’s possible that some of the many oddball claims, like “President John Adams graduated from the University of Wisconsin 21 times,” “snakes are mammals,” and “make chlorine gas to clean your washing machine,” are edited hoax images. But the fact that it’s impossible to tell the difference only speaks to the internet’s larger existential problem. And even if only some of Google’s many ridiculous or downright dangerous AI suggestions are genuine (and as Ars Technica shows, they are), arguing that they’re just bugs in the system likely won’t shield the company from an onslaught of legal challenges and lawsuits.

Google promises the company is constantly working to improve its products, including AI Overview. In a statement also provided to Ars Technica, a spokesperson states, “We conducted extensive testing before launching this new experience and will use these isolated examples as we continue to refine our systems overall.” It’s unclear how long these false answers may keep popping up in AI Overview, or if such a flaw is even truly fixable. In the meantime, however, at least Google has plenty of “isolated examples” to use as learning experiences. And while the company keeps working on those system refinements, there are still ways to disable AI for your own Google searches.

Update 5/28/24 8:50am: Google provided Popular Science with the following statement regarding AI Overview results:

“The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web. Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce. We conducted extensive testing before launching this new experience, and as with other features we’ve launched in Search, we appreciate the feedback. We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out.”