By now you should’ve upgraded to iOS 26 on your iPhone, and the update is a big one. In addition to rolling out an entirely new look (called Liquid Glass), iOS 26 introduces a host of new and upgraded features, from a new battery-saving mode to a mobile version of the classic Preview Mac app.

Another change ushered in by iOS 26 is an expanded Visual Intelligence tool, part of Apple Intelligence. It uses AI to analyze what’s on your phone screen or what your camera is looking at, so you can use it to identify objects, for example, or to pick out a date and time from a flyer and add it to your calendar.

Because this is an Apple Intelligence feature, you need an iPhone that supports Apple Intelligence: The iPhone 15 Pro or iPhone 15 Pro Max, any iPhone 16 model, any iPhone 17 model, or the iPhone Air will work. Assuming you have one of those models and have iOS 26 installed, we can dive into what Visual Intelligence is capable of.

Get information on screenshots

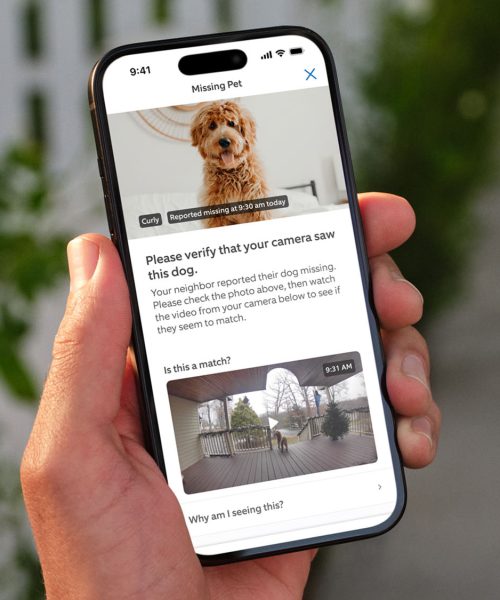

If you can screenshot something on your iPhone, you can get information from it with Visual Intelligence. Press the volume up button and the side button together to take a screenshot, and your Visual Intelligence options appear along the bottom (though they may vary slightly depending on what’s on screen).

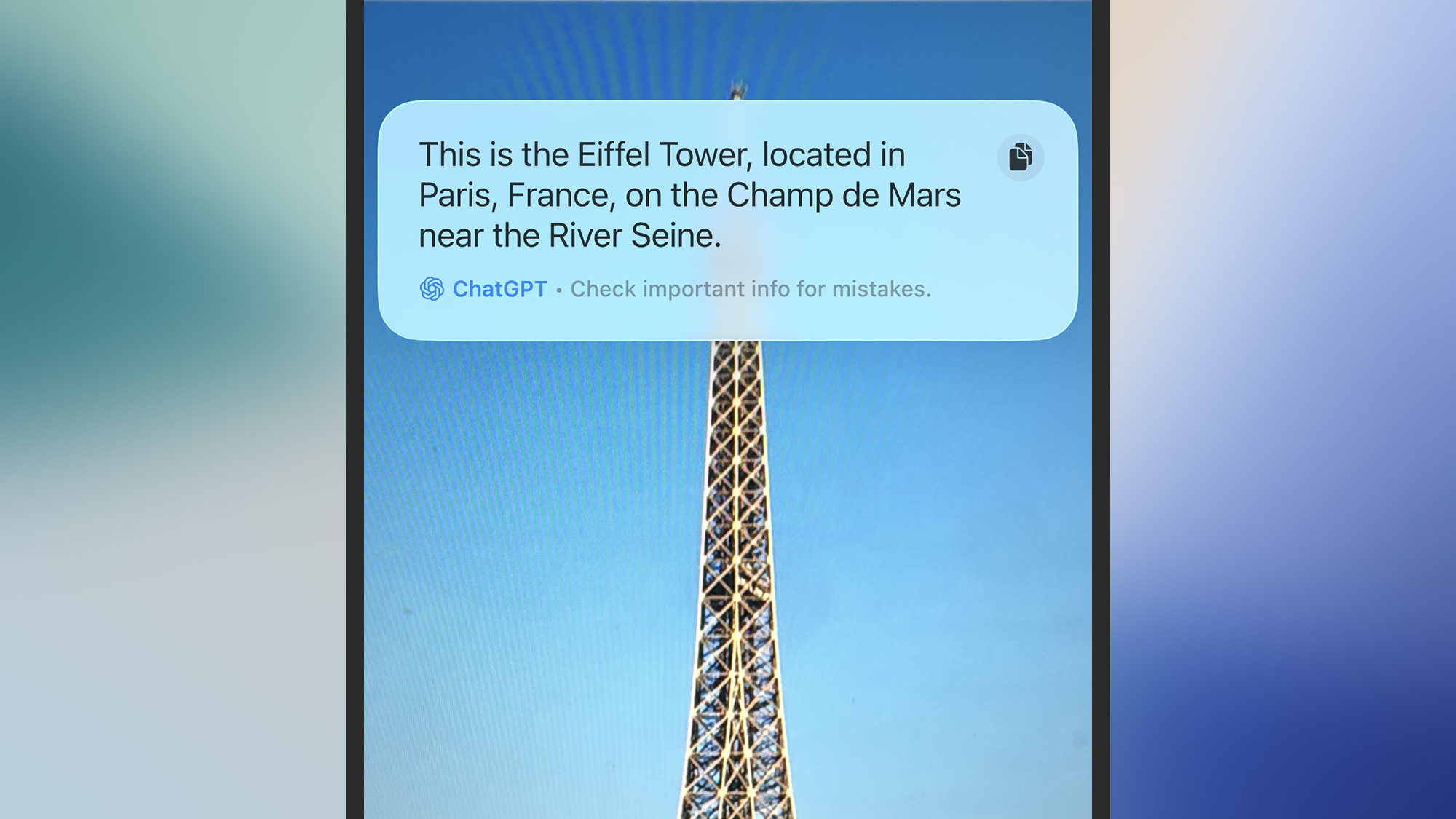

In the lower left corner you have an Ask button. Tap this to ask ChatGPT anything about what’s on screen, from a building captured in a photo to a settings screen in one of your apps. You can ask anything about your captured image, just as you would if you uploaded a picture inside the ChatGPT app.

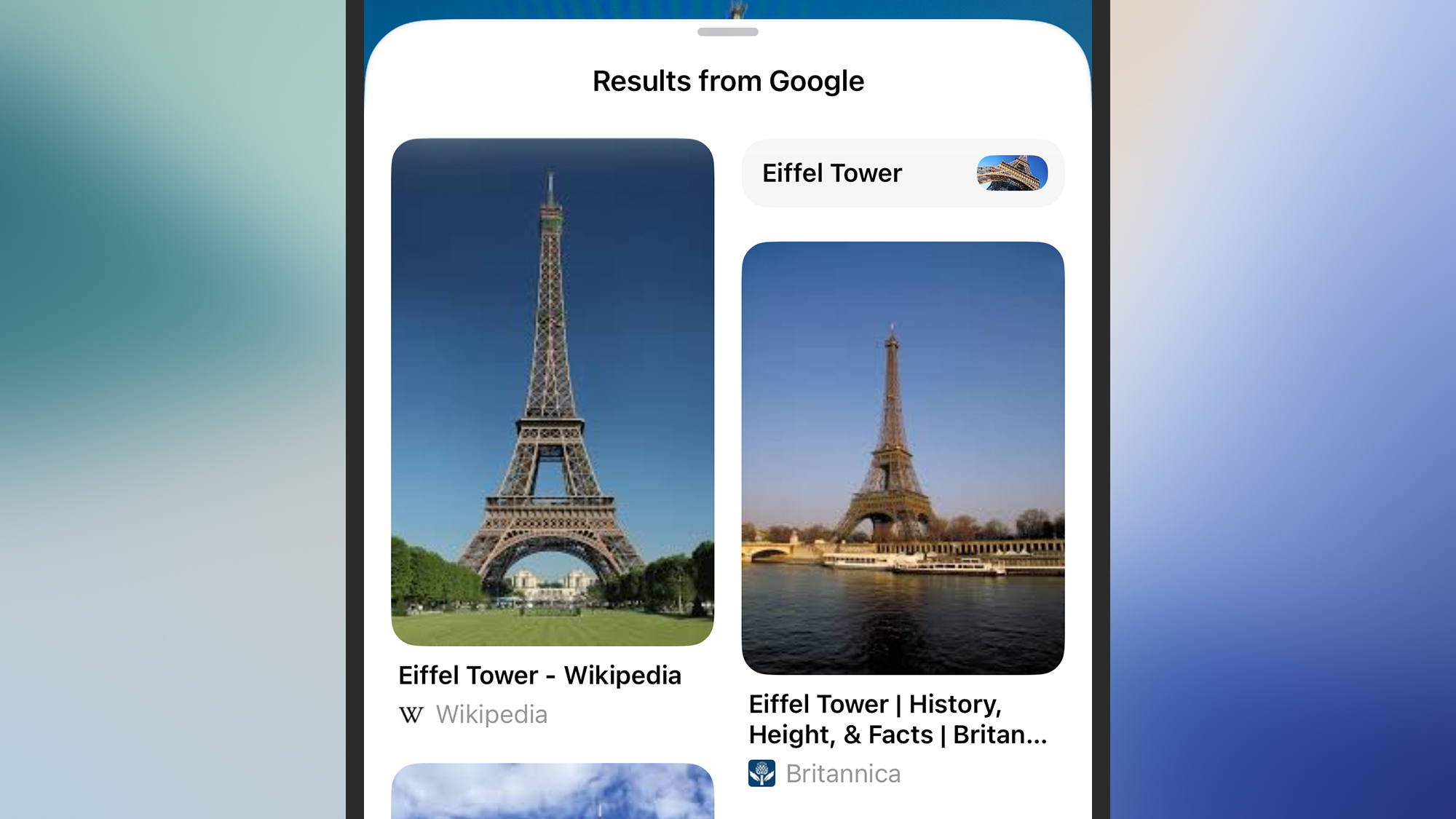

Then there’s the Search button in the lower right corner, which loads up a panel showing similar images that Google has found. If you want to be more precise, scribble with your finger on part of the image, and Google looks for visual matches to just that section of the screen. You can use this to find an online store selling something you’ve come across online, for example, or to identify an object or a person.

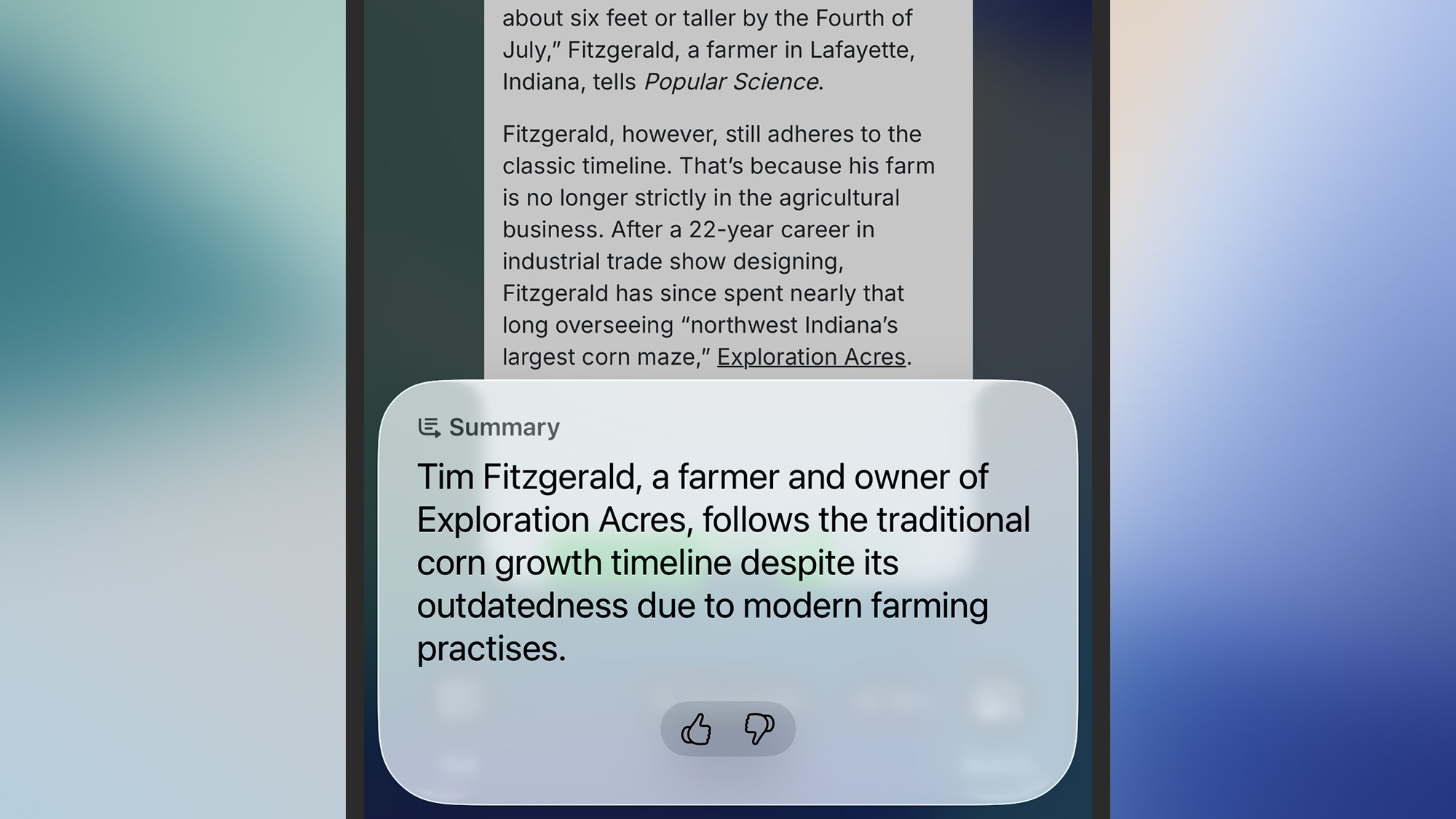

If Visual Intelligence recognizes a time and date in your screenshot (a poster for a gig, perhaps), you’ll see an Add to Calendar button at the bottom that does just that: Time, date, and location info can all be added, and you can modify the details if needed. You might also see buttons labeled Summarize (for summarizing blocks of text) or Look Up (for something that can be identified, like a company logo, landmark, or animal).

Sometimes Visual Intelligence will recognize something in a screenshot right away (such as a species of plant), and you can just tap the label to get more information. Depending on the content of the screenshot, you might also see a Read Aloud option (for having the text in the image read out to you) and direct links to any websites mentioned on screen.

When you’re done with your Visual Intelligence analysis, you can tap the checkmark (top right) to save the screenshot to your photo gallery, or the cross symbol (top left) to discard it. If you don’t like seeing the full-screen preview every time you take a screenshot, you can disable it in Settings via General > Screen Capture > Full-Screen Previews: That reverts you to the old thumbnail approach, and you can still tap the thumbnail to use Visual Intelligence.

Get information through your camera

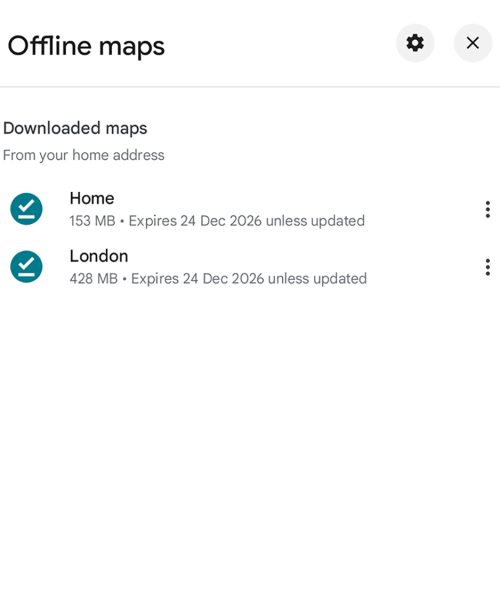

You can also use Visual Intelligence in real time through your iPhone camera—this functionality was actually introduced with iOS 18.2, and continues into iOS 26. It works a lot like the screenshot options we’ve already covered, and there is some overlap in the features, but in this case you can point the AI at anything in the world around you.

The easiest way to launch Visual Intelligence in camera mode is to press and hold the Camera Control button on your iPhone (on the lower right side as you look at it in portrait orientation). On models that don’t have Camera Control (the iPhone 15 Pro, iPhone 15 Pro Max, and iPhone 16e), you can launch the feature via the Action button or from the Visual Intelligence icon in the Control Center (it looks like the Apple Intelligence logo inside a frame).

If you don’t see the icon in the Control Center when you swipe down from the top right of the screen, tap the + (plus) button in the top left corner to add it. You can also add a Visual Intelligence shortcut to the lock screen: Press and hold your finger on the lock screen, then choose Customize.

Once you’ve launched Visual Intelligence in camera mode, you can put it to work. There are three buttons along the bottom: Ask (for asking ChatGPT questions about what you’re looking at), a circular capture button (for taking a picture to analyze further), and Search (for running a Google search for images matching what you’re looking at).

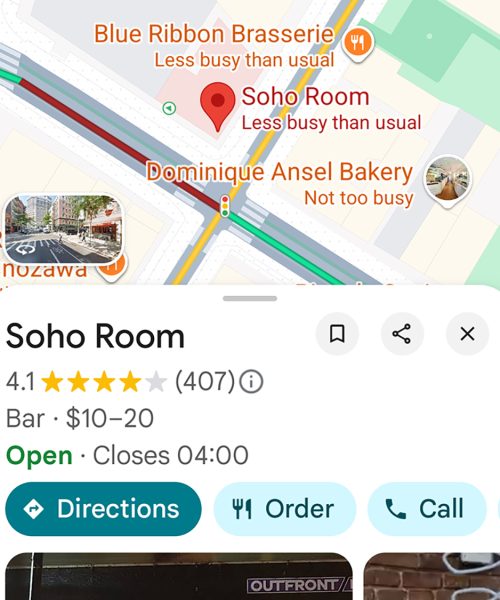

You could, for example, point your camera at the label of a wine bottle and ask ChatGPT to tell you more about it—including the foods it goes well with. Or you could run a Google search based on a bicycle you’ve spotted on the street, to find out how much the model costs and where you can buy it.

If you take a photo, many of the same options mentioned above appear on screen: You can have text summarized, translated, or read aloud, add events to your calendar, and look up more information on the web. Animals and plants can be identified, and you can also point your camera at a business on the street to get more details on it, including its contact number and opening hours. Tap the X symbol to dismiss a captured image from the screen.