Researchers from Columbia University have successfully developed an autonomous robot arm capable of learning new motions and adapting to damage simply by watching itself move. The robot observed a video of itself and then used that data to plan its next actions—a practice the researchers refer to as “kinematic self-awareness.” This unique learning process is designed to mimic the way humans adjust certain movements by watching themselves in a mirror.

Teaching robots to learn this way could reduce the need for extensive training in bespoke 3D simulations. It could also leave future autonomous robots operating in the real world better equipped to adapt to damage and environmental changes without constant human intervention. The findings were published this week in the journal Nature Machine Intelligence.

Inspiration from babies and dancers looking at mirrors

Humans are one of a select few species that have evolved to recognize their own reflection and learn from it. Young children acknowledge their bizarre new bodies in mirrors or ponds, take notes about what they are seeing, and create a “mental model” of themselves in 3D space. That observation can help the development of movement, coordination, and early language skills. This process, which the researchers call “self-simulation,” doesn’t just apply to children either. Dancers also regularly analyze their own reflections to correct their placement and receive instant visual feedback.

For this study, the researchers wanted to see if they could find a way to apply a similar self-simulation process to an autonomous robot. Normally, roboticists train the AI models powering robots in highly detailed and curated virtual simulations. Only after copious rounds of training are complete is a model typically applied to a physical machine. But that process is limiting in multiple ways. For starters, advanced simulations can take a long time to perfect and often require extensive engineering expertise. They are also somewhat limited in adaptability. If something unexpected happens to the robot in the physical world that falls outside the parameters it was trained on in simulation, it might have trouble responding properly.

“Our goal is a robot that understands its own body, adapts to damage, and learns new skills without constant human programming,” Columbia University doctoral student and paper lead author Yuhang Hu said in a statement.

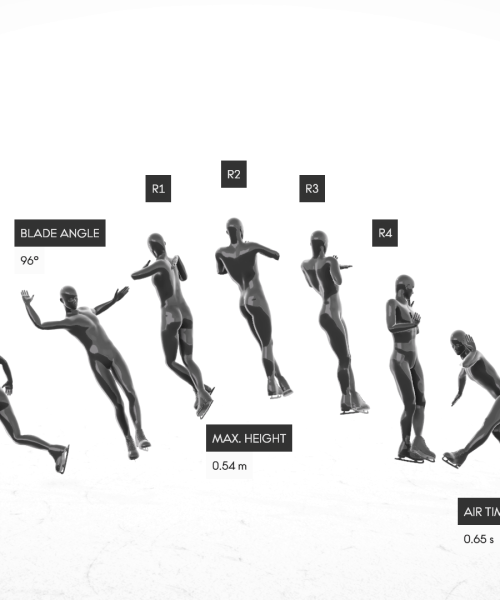

To do that, the researchers developed a new “self-supervised learning framework” consisting of three separate deep neural networks. A coordinate encoder first processes a raw video of the robot captured by a single camera, a process the researchers liken to a human looking at themselves in a mirror. A kinematic encoder then converts that image—which includes data highlighting the robot’s joints and other areas of movement—into a binary image. Finally, the predictive model completes the process by instructing the physical robot arm on how to move in the real world. The result is a robot that can continuously adjust its movements based on video data from a camera rather than relying on a virtual training simulation.

Robot arm was able to adapt to damage on the fly

The researchers tested their new learning framework by putting their robot arm through a few basic tasks. First, they demonstrated that the arm could avoid an obstacle (in this case, a cardboard-like divider) simply by watching a video frame of itself successfully swinging past the barrier. More interestingly, they also showed how the same model could help the robot adapt to damage. Researchers wanted to see how the robot would respond in a hypothetical scenario where an overly heavy load bent its limb. To test this, they 3D-printed a damaged limb and attached it to the robot. After watching a video of itself with the new arm, the model was able to refine its predictions and adjust the robot’s movements to compensate for the simulated damage.
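
One way to picture the damage check is to compare the silhouette the robot expects to see with the one the camera actually shows. The snippet below is a minimal sketch of that idea, not the researchers' method: the overlap score (intersection-over-union), the 0.8 threshold, the tiny 4x4 masks, and the names `iou` and `looks_damaged` are all assumptions made up for illustration.

```python
from typing import List

# A binary image: 1 marks a pixel covered by the robot, 0 marks background.
Mask = List[List[int]]


def iou(predicted: Mask, observed: Mask) -> float:
    """Intersection-over-union of two same-sized binary masks."""
    intersection = 0
    union = 0
    for pred_row, obs_row in zip(predicted, observed):
        for p, o in zip(pred_row, obs_row):
            if p and o:
                intersection += 1
            if p or o:
                union += 1
    # Two empty masks are treated as a perfect match.
    return intersection / union if union else 1.0


def looks_damaged(predicted: Mask, observed: Mask, threshold: float = 0.8) -> bool:
    """Flag a mismatch when the overlap drops below a hypothetical threshold."""
    return iou(predicted, observed) < threshold


# What a (hypothetical) self-model expects: a straight vertical limb.
predicted = [
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
]

# What the camera sees after the limb has been bent near the top.
bent = [
    [0, 0, 1, 0],
    [0, 0, 1, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
]

if __name__ == "__main__":
    print("healthy overlap:", iou(predicted, predicted))
    print("bent overlap:", iou(predicted, bent))
    print("damage suspected:", looks_damaged(predicted, bent))
```

In this toy setup a low overlap score only signals that the self-model no longer matches reality. The adaptation the article describes, where the model refines its predictions after watching new video, would be a separate retraining step that this sketch does not attempt.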

“Our results demonstrate that this self-learned simulation not only enables accurate motion planning but also allows the robot to detect abnormalities and recover from damage,” the researchers wrote in a preprint version of the paper.

Self-simulation could help future autonomous robots operate with less human intervention

The self-simulation method demonstrated in the paper could help improve a wide range of future autonomous robots that may one day be deployed for various tasks, from manufacturing work to environmental and industrial monitoring. The ability of these robots to learn from camera data and even adapt to damage could reduce downtime and prevent human repair workers from being unnecessarily exposed to potentially hazardous conditions. Self-repairing capabilities could become even more important in areas like robot-assisted elder and child care, where damage to the machines could have an immediate impact on a person’s well-being.

There are also more mundane advantages to this approach. The researchers note that a self-repairing vacuum robot, for instance, could theoretically use these techniques to adjust its movement after noticing it had bumped into a wall and knocked a limb slightly out of place. In other words, clumsy Roombas barreling through cluttered apartments might one day stand more of a fighting chance.

“We humans cannot afford to constantly baby these robots, repair broken parts and adjust performance,” Columbia Professor and paper co-author Hod Lipson said. “Robots need to learn to take care of themselves.”