AI-generated deepfake videos depicting humans are getting more advanced, and more common, by the day. The most sophisticated tools can now produce manipulated content that the average viewer cannot distinguish from real footage. Deepfake detectors, which use their own AI models to analyze video clips, attempt to see through this deception by searching for hidden tells. One of those is the presence of a human pulse. In the past, AI models that detected a noticeable pulse or heart rate could confidently classify those clips as genuine. But that may no longer be the case.

Researchers from Humboldt University of Berlin published a study this week in the journal Frontiers in Imaging in which they created deepfake videos of people that appeared to show human-like pulses. Deepfake detectors trained to use pulses as a marker of authenticity incorrectly classified these manipulated videos as real. The findings suggest that heart rate and pulse—once considered relatively reliable indicators of authenticity—may no longer hold up against the most advanced generative AI deepfake models. In other words, the constant cat-and-mouse game between deepfake creators and detectors may be tipping in favor of the deceivers.

“Here we show for the first time that recent high-quality deepfake videos can feature a realistic heartbeat and minute changes in the color of the face, which makes them much harder to detect,” Humboldt University of Berlin professor and study corresponding author Peter Eisert said in a statement.

How deepfakes work and why they are dangerous

The term deepfake broadly refers to an AI technique that uses deep learning to manipulate media files. Deepfakes can be used to generate images, video, and audio with varying degrees of realism. While some use cases may be relatively harmless, the technology has rapidly gained notoriety for fueling a surge in non-consensual sexual imagery. An independent researcher speaking with Wired in 2023 estimated that around 244,625 manipulated videos were uploaded to the top 35 deepfake porn websites over just a seven-day period. The recent rise of so-called “nudify” smartphone apps has further amplified the problem, enabling people with no technical expertise to insert someone’s face into sexually explicit images with the click of a button.

There are also concerns that deepfakes, particularly audio and video versions, could be used to trick people into falling for financial scams. Others fear the technology could be used to create convincing copies of lawmakers and other powerful figures to spread misinformation. Deepfakes portraying presidents Barack Obama and Donald Trump, among many other public figures, have already proliferated. Congress just this week passed a controversial new bill called the Take It Down Act that would criminalize posting and sharing non-consensual sexual images, including those generated using AI.

How deepfake detectors scan videos for a pulse

But any effort to meaningfully combat deepfakes requires a robust system to accurately separate them from genuine material. In the past, deepfake videos were often recognizable even to casual viewers because they contained tell-tale signs, or “artifacts,” such as unnatural eyelid movements or distortions around the edges of the face. As deepfake AI models have improved, however, researchers have had to develop more sophisticated methods for spotting fakes. Since at least 2020, one of those techniques has involved using remote photoplethysmography (rPPG), a method best known for its use in telehealth to measure human vital signs, to detect signs of a pulse. Until now, it was widely assumed that AI-generated images of humans, convincing though they might be, would not exhibit a detectable pulse.

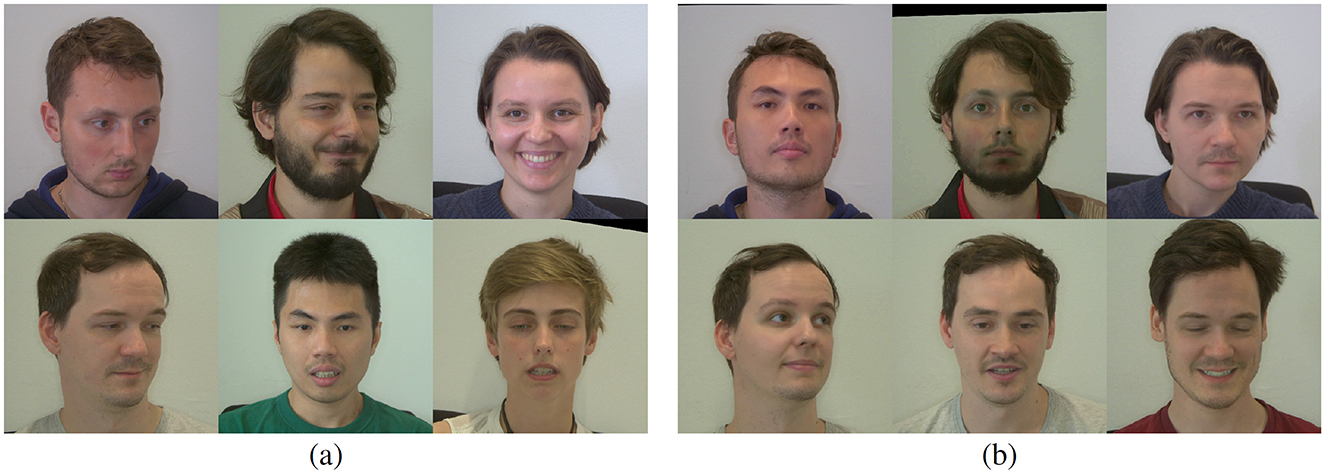

Researchers at Humboldt University wanted to see whether those assumptions still held true when tested against some of the most up-to-date deepfake techniques. To test their hypothesis, they first developed a deepfake detection system designed specifically to analyze videos for signs of heart rate and pulse. They trained it using video data collected from human participants who were asked to engage in a range of activities such as talking, reading, and interacting with the recording supervisor, all of which produced varied facial expressions. The researchers found that their model was able to accurately identify individuals’ heart rates after being trained on just 10 seconds of video data.
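To give a rough sense of how a pulse can be read from a short clip, here is a minimal sketch in Python. It is not the researchers' detector, whose internals the article does not describe. It assumes a hypothetical `trace` that already holds one average skin-color value per video frame (for example, the mean green value of the face region) and simply looks for the strongest rhythm within an assumed plausible heart-rate range of 40 to 180 beats per minute. The function name, the range, and the synthetic 10-second clip are all illustrative assumptions.

```python
import math


def estimate_heart_rate_bpm(trace, fps, min_bpm=40, max_bpm=180):
    """Return the strongest periodic rate (beats per minute) found in a color trace.

    trace: one average skin-color value per frame (hypothetical input).
    fps:   frames per second of the video.
    """
    n = len(trace)
    mean = sum(trace) / n
    # Remove the constant skin-tone level so only the tiny fluctuations remain.
    centered = [value - mean for value in trace]

    best_bpm, best_power = None, -1.0
    # Test each candidate heart rate and measure how strongly the trace
    # oscillates at that frequency (a direct Fourier-style projection).
    for bpm in range(min_bpm, max_bpm + 1):
        freq_hz = bpm / 60.0
        re = sum(c * math.cos(2 * math.pi * freq_hz * i / fps)
                 for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * freq_hz * i / fps)
                 for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


def synthetic_trace(bpm, fps=30, seconds=10, base=120.0, amplitude=0.5):
    """Build a made-up trace: a steady skin tone plus a faint pulse ripple."""
    freq_hz = bpm / 60.0
    return [base + amplitude * math.sin(2 * math.pi * freq_hz * i / fps)
            for i in range(fps * seconds)]


# Made-up example: 10 seconds of 30 fps video with a 72 bpm pulse.
demo_trace = synthetic_trace(bpm=72)
print(estimate_heart_rate_bpm(demo_trace, fps=30))
```

Real footage is far noisier than this synthetic trace. Head motion, lighting changes, and video compression all disturb the signal, so practical systems add face tracking and filtering steps that are left out here.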

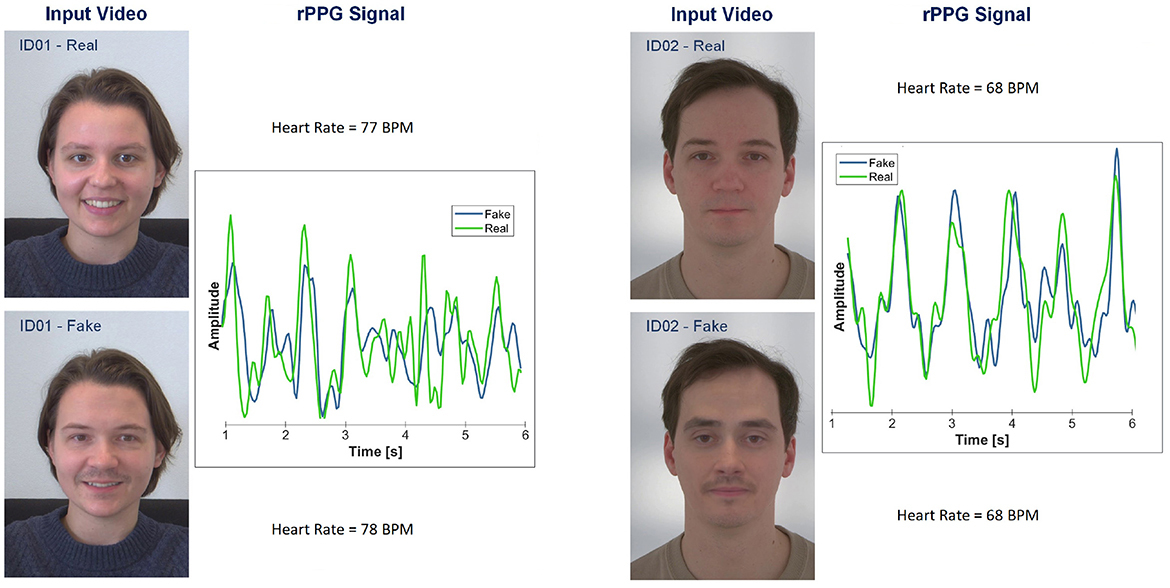

With that baseline set, the researchers then repeated the test, this time using AI-generated videos of the same human participants. In total, they created 32 deepfake videos, all of which appeared authentic to the human eye. Although the researchers expected these manipulated videos to be flagged by the detector for lacking a pulse, the exact opposite happened. The detector registered heartbeats that shouldn’t have been present and incorrectly concluded that the videos depicted real humans.

“Our experiments demonstrated that deepfakes can exhibit realistic heart rates, contradicting previous findings,” the researchers write.

Detectors may need to adapt to a new reality

The researchers say the findings point to a potential vulnerability in modern deepfake detectors that could be exploited by bad actors. In theory, they note, deepfake generators could “insert” signs of heartbeats into manipulated videos to fool detection systems. It’s worth noting, though, that this wasn’t the case in the current study. Instead, the deepfake videos appeared to have “inherited” the heartbeat signals from the original videos they were based on. It’s not exactly clear how that inheritance occurred. A figure included in the paper, showing heat maps of both the original human videos and the deepfakes, shows similar variations in the transmission of light through skin and blood vessels. Some of these changes, referred to in the research as a “signal trace,” are nearly identical, which suggests they were carried over from the original video into the new deepfake. All of this suggests more advanced models are able to replicate those minute variations in their fakes, something that wasn’t possible with deepfake tools of a few years ago.

“Small variations in skin tone of the real person get transferred to the deepfake together with facial motion, so that the original pulse is replicated in the fake video,” Eisert said.
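One simple way to put a number on how closely a deepfake's signal trace follows the original's is a correlation score. The sketch below is an illustration only, not the analysis used in the paper. It assumes two hypothetical lists of per-frame skin-color values of equal length, and the traces themselves are made up. A score near 1 would be consistent with the pulse having been carried over, while a score near 0 would point to unrelated signals.

```python
import math


def pearson_correlation(a, b):
    """Pearson correlation between two equal-length signal traces."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("traces must have the same length (at least 2 samples)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("a constant trace has no variation to compare")
    return covariance / math.sqrt(var_a * var_b)


def pulse_trace(bpm, base, amplitude, fps=30, seconds=10):
    """Made-up trace: a steady skin tone plus a faint ripple at the given rate."""
    freq_hz = bpm / 60.0
    return [base + amplitude * math.sin(2 * math.pi * freq_hz * i / fps)
            for i in range(fps * seconds)]


# Hypothetical traces: the "fake" has a different skin tone and a weaker
# ripple, but the same 72 bpm rhythm as the "original".
original = pulse_trace(bpm=72, base=120.0, amplitude=0.5)
inherited_fake = pulse_trace(bpm=72, base=135.0, amplitude=0.3)
unrelated = pulse_trace(bpm=90, base=120.0, amplitude=0.5)

print(round(pearson_correlation(original, inherited_fake), 3))
print(round(pearson_correlation(original, unrelated), 3))
```

Correlation ignores differences in overall brightness and ripple strength, which is why a fake with a different skin tone can still score highly if its rhythm matches the source.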

These results, though significant, don’t necessarily mean that efforts to effectively mitigate deepfakes are futile. The researchers note that while today’s advanced deepfake tools may simulate a realistic heartbeat, they still don’t consistently depict natural variations in blood flow across space and time within the face. Elsewhere, some commercial deepfake detectors are already using more granular metrics—such as measuring variations in pixel brightness—that don’t rely on physiological characteristics at all. Major tech companies like Adobe and Google are also developing digital watermarking systems for images and videos to help track whether content has been manipulated using AI. Still, this week’s findings underscore how the rapid evolution of deepfake technology means those working to detect it can’t afford to rely on any single method for long.